Careers and Jobs

For University Graduates and Professionals

Projects and Jobs for Students

Robot Motion Planning

Human-Robot Interaction

Robot Control

Project type: Forschungspraxis/Ingenieurpraxis (possible thesis extension). Application deadline: September 15, 2024

Efficient, fast manipulation remains a major challenge in robotics. Traditionally, generating fast motion requires scaling up actuator power. Recently, however, more attention has been paid to additional ways of storing and releasing mechanical energy, so that the baseline actuator power can stay low (just enough to satisfy general manipulation requirements). For a "fast mode" of manipulation, energy can then be injected from mechanical elements already present in the system. This is useful for tasks such as throwing or other explosive maneuvers.

The Bi-Stiffness Actuation (BSA) concept [1] is a physical realization of this idea. It uses a switch-and-hold mechanism for full link decoupling while simultaneously braking the spring element, which allows controlled storage and release of energy. Changing modes within the actuator (clutch engagement and disengagement) causes an impulsive switch in the dynamics.

Students are expected to study and understand the physical and mathematical representations of the developed concepts, and to apply and deepen their understanding of multi-DoF manipulator systems, their control, and their classification. Further, they will develop a control codebase for the system using state-of-the-art simulation frameworks. The work will be the foundation for further research, so the student is expected to follow best coding practices and document their work.

Requirements from candidates:

- Knowledge of Matlab, C++, Python

- Working skills in Ubuntu operating system

- Familiarity with ROS

- Robotics (forward and inverse kinematics and dynamics)

- English proficiency at C1 level, including reading academic papers

- Nice to have:

- Knowledge of working with Gazebo/MuJoCo

- Familiarity with Git

- Software design patterns

- Familiarity with Docker

- GoogleTest (or another testing framework)

[1] Ossadnik, Dennis, et al. "BSA-Bi-Stiffness Actuation for optimally exploiting intrinsic compliance and inertial coupling effects in elastic joint robots." 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022.

To apply, send your CV and a short motivation statement to:

Supervisor M.Sc. Vasilije Rakcevic

Mechatronics

Position type: Forschungspraxis/Internship, possible thesis extension. Application deadline: October 15, 2024

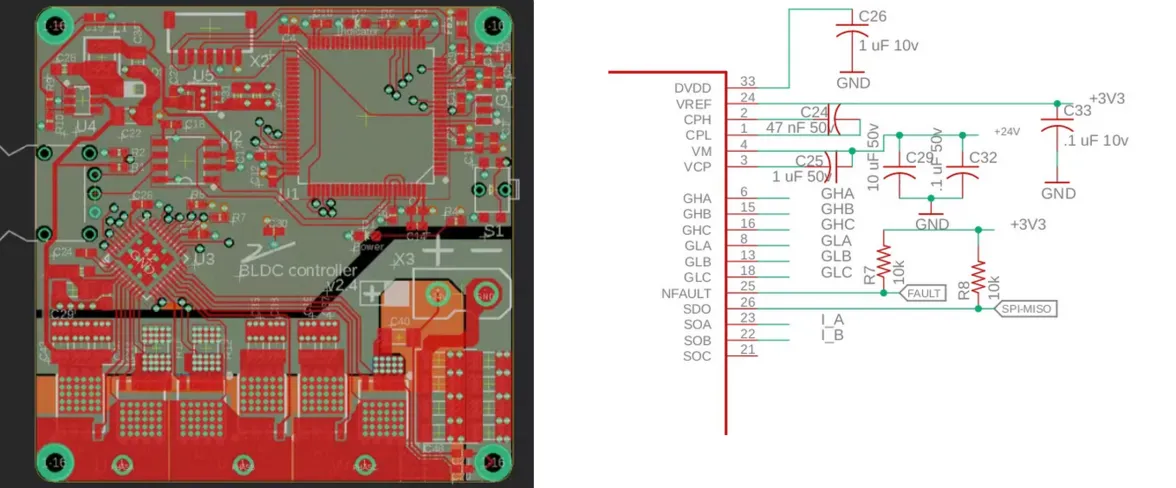

Brushless motors are growing in popularity for robotics applications. In particular, thanks to their high power density, they can be used with smaller gear ratios while still meeting high torque and speed requirements. For example, a key to the MIT Mini Cheetah's success was the adoption of BLDC motors within the proprioceptive actuator concept [1].

The main challenge in using BLDC actuators is a more complex control scheme than for other common motor types: three phases must be coordinated in a specific manner to supply the required power. This concept is not new; one of the most popular mainstream approaches is field-oriented control (FOC). However, attention should go not only to the control logic but also to the PCB, which must deliver high power, receive and transmit signals with minimal noise, avoid overheating, and so on.
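To illustrate what "coordinating three phases" means in FOC, the following minimal sketch shows the two coordinate transforms commonly used at its core: the Clarke transform (three phase currents to a stationary two-axis frame) and the Park transform (rotation into the rotor frame). The function names and the test signal are hypothetical and not taken from the existing driver firmware; a real controller would add current regulators and PWM generation on top.

```python
import math

def clarke(i_a, i_b, i_c):
    """Amplitude-invariant Clarke transform: phase currents -> (alpha, beta)."""
    i_alpha = (2.0 / 3.0) * (i_a - 0.5 * i_b - 0.5 * i_c)
    i_beta = (i_b - i_c) / math.sqrt(3.0)
    return i_alpha, i_beta

def park(i_alpha, i_beta, theta_e):
    """Park transform: rotate (alpha, beta) by the electrical angle into (d, q)."""
    c, s = math.cos(theta_e), math.sin(theta_e)
    i_d = c * i_alpha + s * i_beta
    i_q = -s * i_alpha + c * i_beta
    return i_d, i_q

def q_axis_phase_currents(amplitude, theta_e):
    """Hypothetical test signal: balanced currents aligned with the q-axis."""
    phi = theta_e + math.pi / 2.0  # q-axis leads the d-axis by 90 degrees
    return (
        amplitude * math.cos(phi),
        amplitude * math.cos(phi - 2.0 * math.pi / 3.0),
        amplitude * math.cos(phi + 2.0 * math.pi / 3.0),
    )

def measure_dq(amplitude, theta_e):
    """Full chain: three sinusoidal phase currents -> constant (i_d, i_q)."""
    i_alpha, i_beta = clarke(*q_axis_phase_currents(amplitude, theta_e))
    return park(i_alpha, i_beta, theta_e)

if __name__ == "__main__":
    for angle_deg in (0, 45, 200):
        i_d, i_q = measure_dq(10.0, math.radians(angle_deg))
        print(f"theta_e = {angle_deg:3d} deg: i_d = {i_d:+.3f} A, i_q = {i_q:+.3f} A")
```

In the rotor frame the sinusoidal phase currents become two slowly varying quantities, which is why FOC can regulate torque (via the q-axis current) with simple controllers.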

The task of this thesis is to take an existing PCB design and work towards the following goals:

- Update the design to increase the driver's current capacity from 15 A to 30 A

- Introduce several additional PCB layers for a denser layout

- Study the possibility of incorporating novel ICs for more automated three-phase gate switching, such as the DRV8323SRTAT

- Investigate which current-sensing schemes to adopt

- Decide on stress tests for the PCB with the motor (see the example of thermal testing)

- Create a stationary setup for testing
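As a back-of-the-envelope illustration of why the step from 15 A to 30 A affects layout and thermal testing, resistive loss grows with the square of the current. The sketch below assumes a hypothetical conduction-path resistance (copper trace plus MOSFET on-resistance); the actual value for the existing board is not given here.

```python
def conduction_loss_w(current_a, resistance_ohm):
    """Resistive (I^2 * R) loss in watts for a given RMS current."""
    return current_a ** 2 * resistance_ohm

# Hypothetical path resistance, chosen only for illustration.
R_PATH_OHM = 0.005

loss_15a = conduction_loss_w(15.0, R_PATH_OHM)
loss_30a = conduction_loss_w(30.0, R_PATH_OHM)

if __name__ == "__main__":
    print(f"15 A: {loss_15a:.3f} W")
    print(f"30 A: {loss_30a:.3f} W")
    print(f"ratio: {loss_30a / loss_15a:.1f}x")
```

With the resistance unchanged, doubling the current quadruples the heat to be dissipated, which is one motivation for wider or additional copper layers and for dedicated thermal tests.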

What you will gain:

- Hands-on experience with and in-depth understanding of brushless motors and their control

- Best practices for PCB design and project collaboration

- Experience in building and prototyping

- Hacking electronic signals (with an oscilloscope, etc.)

- Insights into our system development and access to our community

Requirements from candidates:

- Knowledge of Electronics/Power electronics

- Experience with PCB design and soldering

- Knowledge of at least one popular PCB design tool

- Basic MATLAB skills

- Nice to have:

- Understanding of how motors work

- Familiarity with Git

- Embedded system programming, knowledge of C language

- Working skills in Ubuntu operating system

To apply, send your CV and a short motivation statement to:

Supervisor

M.Sc. Vasilije Rakcevic

[1] P. M. Wensing, A. Wang, S. Seok, D. Otten, J. Lang and S. Kim, "Proprioceptive Actuator Design in the MIT Cheetah: Impact Mitigation and High-Bandwidth Physical Interaction for Dynamic Legged Robots," in IEEE Transactions on Robotics, vol. 33, no. 3, pp. 509-522, June 2017, doi: 10.1109/TRO.2016.2640183.

Application deadline: October 20, 2024

Robotic solutions have recently been moving towards direct-drive actuation, which involves lower gear ratios and BLDC motors. These solutions offer several advantages:

- Good back-drivability

- Joint torque sensing based on the motor currents

- Good torque density, owing to the characteristics of BLDC motors
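The current-based torque sensing mentioned in the list above can be sketched as follows. This is a minimal model that assumes a linear torque constant and a lumped transmission efficiency; the function name and all numeric values are hypothetical, and effects such as friction, cogging, and saturation are ignored.

```python
def estimate_joint_torque(i_q_a, torque_constant_nm_per_a, gear_ratio, efficiency=1.0):
    """Estimate output joint torque (N*m) from the torque-producing current i_q.

    Motor torque is modeled as K_t * i_q and scaled by the gear ratio and
    a lumped transmission efficiency.
    """
    motor_torque = torque_constant_nm_per_a * i_q_a
    return motor_torque * gear_ratio * efficiency

if __name__ == "__main__":
    # Hypothetical actuator: K_t = 0.1 N*m/A, 6:1 reduction, 10 A of q-axis current.
    tau = estimate_joint_torque(10.0, 0.1, 6.0)
    print(f"estimated joint torque: {tau:.2f} N*m")
```

A low gear ratio keeps the transmission close to this ideal model, which is why the estimate is more usable with direct-drive designs than with highly geared actuators.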

The article in [1] introduces what its authors call the "proprioceptive" actuation concept (direct drive using BLDC motors). MIT Mini Cheetah-like robots have since become a popular choice of research platform for legged locomotion.

However, direct-drive actuators have not become as popular for manipulator applications, for several reasons:

- They consume a large amount of power to compensate for gravitational loads when stationary

- Manipulator control is not impact-driven (unlike legged walking), so more traditional designs with additional torque sensors have sufficient bandwidth for common manipulation tasks

- Torque sensing is not required for established industrial manipulator applications

Some manipulators do adopt direct-drive actuators of a design similar to the Cheetah's; see https://rsl.ethz.ch/robots-media/dynaarm.html for an example. To overcome overheating problems (power is consumed constantly for active gravity compensation), they adopt active cooling methods.

Parallel Elastic Actuator

Since manipulators and legs often require only a relatively small operational range, the idea is to use a parallel spring to take some of the load off the motor. The drawback is that for certain motions, besides overcoming the load required to move the robot, the actuator also has to "fight" the spring force. However, if the spring is chosen so that its main purpose is full or partial compensation of the static loads, this scenario offers more advantages, we argue.
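The static trade-off can be sketched for a single link with a torsional spring mounted in parallel with the motor. This is a simplified model under stated assumptions, not the project's design: the link parameters, the linear spring, and the choice to cancel gravity exactly at one nominal angle are all hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def gravity_torque(q, mass, com_dist):
    """Torque (N*m) needed to hold a link at angle q, measured from horizontal."""
    return mass * G * com_dist * math.cos(q)

def spring_stiffness_for(q_nom, q_rest, mass, com_dist):
    """Stiffness (N*m/rad) that cancels gravity exactly at the nominal angle."""
    return gravity_torque(q_nom, mass, com_dist) / (q_rest - q_nom)

def motor_holding_torque(q, mass, com_dist, stiffness=0.0, q_rest=0.0):
    """Static motor torque: gravity load minus the parallel spring's assistance."""
    spring_torque = stiffness * (q_rest - q)
    return gravity_torque(q, mass, com_dist) - spring_torque

if __name__ == "__main__":
    mass, com_dist = 2.0, 0.3            # hypothetical link: 2 kg, COM at 0.3 m
    q_nom, q_rest = 0.0, math.pi / 2.0   # compensate at horizontal, spring relaxed at vertical
    k = spring_stiffness_for(q_nom, q_rest, mass, com_dist)
    for q in (-0.3, 0.0, 0.3):
        without = motor_holding_torque(q, mass, com_dist)
        with_spring = motor_holding_torque(q, mass, com_dist, k, q_rest)
        print(f"q = {q:+.1f} rad: no spring {without:.2f} N*m, with spring {with_spring:.2f} N*m")
```

Within a small range around the nominal angle the holding torque drops substantially, while away from it the motor increasingly has to work against the spring, which is the drawback described above.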

Research question

This topic is highly relevant for research. The intended contribution is the control of parallel elastic actuation to overcome negative spring properties such as oscillation, together with exploring the damping capabilities of the actuator and the use of the spring for passive control.

What you will gain:

- Hands-on experience with and in-depth understanding of classical robotics and various control methods

- Best practices for software development and collaboration

- Experience in building and prototyping

- Insights into our system development and access to our community

Requirements from candidates:

- Mechanical Engineering background

- MATLAB skills

- Any CAD software for part design (such as SolidWorks, Fusion 360, etc.)

- Basic experience in C (embedded) programming

- Basic skills in electronics

- Nice to have:

- Understanding of how motors work

- Familiarity with Git

- Working skills in Ubuntu operating system

To apply, send your CV and a short motivation statement to:

Supervisor

M.Sc. Vasilije Rakcevic

[1] P. M. Wensing, A. Wang, S. Seok, D. Otten, J. Lang and S. Kim, "Proprioceptive Actuator Design in the MIT Cheetah: Impact Mitigation and High-Bandwidth Physical Interaction for Dynamic Legged Robots," in IEEE Transactions on Robotics, vol. 33, no. 3, pp. 509-522, June 2017, doi: 10.1109/TRO.2016.2640183.

Brain Computer Interfaces

Dexterous manipulation

The goal of this 3-month Forschungspraxis or 6-month thesis is to fine-tune OpenVLA [4] for multifingered grasping or manipulation tasks. The test platform is a 7-DoF arm equipped with a multifingered hand.

Background:

The emergence of language models such as GPT and Llama has significantly enhanced the capabilities of robots by endowing them with common knowledge, enabling them to address complex tasks in a more generalized manner. Vision-language-action (VLA) models integrate language with perception and action. Existing work shows that pretrained VLA models can control multiple robots out of the box in an end-to-end way. Octo [1] integrates state-of-the-art vision, language, and control models to enable robotic control via natural language commands. RT-2 [2] expresses actions as tokens and trains on a large-scale dataset to enhance robotic performance. VoxPoser [3] employs a modular algorithm that constructs a 3D value map by considering affordances and constraints, facilitating more precise control. OpenVLA [4] uses a fine-tuning approach, resulting in higher success rates across multiple tasks. In the datasets behind these models, the common end effector is a two-finger gripper, which restricts the models' ability to perform dexterous-hand tasks. Li et al. [5] show that dexterous hands can adapt to more complex robotic tasks.
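To make "expressing actions as tokens" concrete, the sketch below discretizes each dimension of a continuous action vector into uniform bins and maps it back. It is an illustration under stated assumptions rather than the exact scheme of any cited model: the function names, the bin count of 256, the normalization range, and the example action are hypothetical.

```python
def tokenize_action(action, low, high, n_bins=256):
    """Map each continuous action dimension to an integer bin index."""
    tokens = []
    for value, lo, hi in zip(action, low, high):
        clipped = min(max(value, lo), hi)
        fraction = (clipped - lo) / (hi - lo)
        # The upper bound would land in bin n_bins, so clamp it to the last bin.
        tokens.append(min(int(fraction * n_bins), n_bins - 1))
    return tokens

def detokenize_action(tokens, low, high, n_bins=256):
    """Map bin indices back to continuous values at the bin centers."""
    return [
        lo + (token + 0.5) * (hi - lo) / n_bins
        for token, lo, hi in zip(tokens, low, high)
    ]

if __name__ == "__main__":
    # Hypothetical normalized action; a multifingered hand would simply add
    # more dimensions (one per actuated finger joint) to this vector.
    action = [0.0, -1.0, 1.0, 0.37]
    low, high = [-1.0] * 4, [1.0] * 4
    tokens = tokenize_action(action, low, high)
    print("tokens:", tokens)
    print("decoded:", detokenize_action(tokens, low, high))
```

One consequence for dexterous hands is that every additional joint adds a token per time step, so the action sequence the model must predict grows with the number of actuated degrees of freedom.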

Your tasks:

- Train and test OpenVLA

- Collect demonstrations from a 7-DoF arm with a multifingered hand

- Fine-tune the OpenVLA model for multifingered grasping or manipulation tasks

- Run the experiments on the real robot

Requirements:

- Knowledge of large language models (LLMs) or foundation models

- Experience with or knowledge from related robotics courses

- Knowledge of PyTorch or a similar machine-learning library

- Knowledge of and experience with Git and Linux are a plus

Helpful but not required:

Experience with diffusion policy, reinforcement learning, MoveIt, ROS

Contacts:

Dr. Shuang Li (li.shuang(at)tum.de)

Please get in touch before 30.09.2024 if possible.

Location: Georg-Brauchle-Ring 60-62, 80992 München

References:

[1] Octo Model Team, Dibya Ghosh, Homer Walke, Karl Pertsch, Kevin Black, Oier Mees, Sudeep Dasari, Joey Hejna, Tobias Kreiman, Charles Xu, Jianlan Luo, You Liang Tan, Lawrence Yunliang Chen, Pannag Sanketi, Quan Vuong, Ted Xiao, Dorsa Sadigh, Chelsea Finn, & Sergey Levine. (2024). Octo: An Open-Source Generalist Robot Policy.

[2] Anthony Brohan, Noah Brown, Justice Carbajal, Yevgen Chebotar, Xi Chen, Krzysztof Choromanski, Tianli Ding, Danny Driess, Avinava Dubey, Chelsea Finn, Pete Florence, Chuyuan Fu, Montse Gonzalez Arenas, Keerthana Gopalakrishnan, Kehang Han, Karol Hausman, Alexander Herzog, Jasmine Hsu, Brian Ichter, Alex Irpan, Nikhil Joshi, Ryan Julian, Dmitry Kalashnikov, Yuheng Kuang, Isabel Leal, Lisa Lee, Tsang-Wei Edward Lee, Sergey Levine, Yao Lu, Henryk Michalewski, Igor Mordatch, Karl Pertsch, Kanishka Rao, Krista Reymann, Michael Ryoo, Grecia Salazar, Pannag Sanketi, Pierre Sermanet, Jaspiar Singh, Anikait Singh, Radu Soricut, Huong Tran, Vincent Vanhoucke, Quan Vuong, Ayzaan Wahid, Stefan Welker, Paul Wohlhart, Jialin Wu, Fei Xia, Ted Xiao, Peng Xu, Sichun Xu, Tianhe Yu, & Brianna Zitkovich. (2023). RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control.

[3] Wenlong Huang, Chen Wang, Ruohan Zhang, Yunzhu Li, Jiajun Wu, & Li Fei-Fei. (2023). VoxPoser: Composable 3D Value Maps for Robotic Manipulation with Language Models.

[4] Moo Jin Kim, Karl Pertsch, Siddharth Karamcheti, Ted Xiao, Ashwin Balakrishna, Suraj Nair, Rafael Rafailov, Ethan Foster, Grace Lam, Pannag Sanketi, Quan Vuong, Thomas Kollar, Benjamin Burchfiel, Russ Tedrake, Dorsa Sadigh, Sergey Levine, Percy Liang, & Chelsea Finn. (2024). OpenVLA: An Open-Source Vision-Language-Action Model.

[5] Li, Y., Liu, B., Geng, Y., Li, P., Yang, Y., Zhu, Y., Liu, T., & Huang, S. (2024). Grasp Multiple Objects With One Hand. IEEE Robotics and Automation Letters, 9(5), pp. 4027–4034.

Robotic hand modeling

Student Assistants (HiWi)

Other Categories

You can find further research opportunities at the chairs of our Principal Investigators!

Data protection notice:

As part of your application for a position at the Technical University of Munich (TUM), you submit personal data. Please note our data protection information pursuant to Art. 13 of the General Data Protection Regulation (GDPR) on the collection and processing of personal data in the context of your application. By submitting your application, you confirm that you have taken note of TUM's data protection information.