(No entries)

Projects for Students

Type: Research Internship (Forschungspraxis)/Semester Thesis (Semesterarbeit) | Please apply before 01.11.2025

Development/Implementation of a Reinforcement Learning-Based Framework for Contact-Rich Manipulation with a Musculoskeletal Hand Model

Humans can use their hands for dexterous, contact-rich manipulation tasks such as grasping and fine manipulation. However, it remains unclear how we plan and control hand motions by exciting muscles through neural signals, and in particular how the motor system handles muscle dynamics, redundancy, and tactile sensory feedback during complex manipulation. The goal of this project is to develop and re-implement frameworks of state-of-the-art (SoTA) learning-based algorithms using MyoSuite models in MuJoCo. The study will also investigate the capabilities and limitations of these algorithms to gain insights into planning for high-dimensional systems. See also: MyoChallenge 2024.
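In MuJoCo-based models such as those in MyoSuite, a control policy typically commands muscle excitations rather than joint torques, and each muscle's activation follows the excitation with a lag. This lag is part of the muscle dynamics a learning agent has to cope with. The sketch below integrates a first-order activation model with separate activation and deactivation time constants, similar in form to MuJoCo's default muscle activation dynamics. The function names, the 10 ms / 40 ms time constants, and the 2 ms step size are assumptions chosen for illustration, not values from the project.

```python
# Sketch: first-order excitation-to-activation dynamics of a single muscle.
# ASSUMPTION: similar in form to MuJoCo's default muscle activation model;
# the time constants and step size below are illustrative values only.


def activation_rate(excitation: float, activation: float,
                    tau_act: float = 0.01, tau_deact: float = 0.04) -> float:
    """Time derivative of activation for a given neural excitation."""
    u = min(max(excitation, 0.0), 1.0)  # excitation (policy action) clamped to [0, 1]
    a = min(max(activation, 0.0), 1.0)  # current activation clamped to [0, 1]
    if u > a:
        tau = tau_act * (0.5 + 1.5 * a)    # activating: fast, slows as a grows
    else:
        tau = tau_deact / (0.5 + 1.5 * a)  # deactivating: slower base constant, shrinks as a grows
    return (u - a) / tau


def simulate(excitations, a0: float = 0.0, dt: float = 0.002) -> list:
    """Integrate activation with explicit Euler over a sequence of excitations."""
    a = a0
    trace = []
    for u in excitations:
        a += dt * activation_rate(u, a)
        a = min(max(a, 0.0), 1.0)  # keep activation in its physical range
        trace.append(a)
    return trace


# 100 ms of full excitation followed by 100 ms of rest: activation lags the command.
trace = simulate([1.0] * 50 + [0.0] * 50)
print(f"after on-phase: {trace[49]:.3f}, after off-phase: {trace[-1]:.3f}")
```

Because the agent only controls excitations, any RL policy for the hand has to anticipate this delay, on top of the redundancy of many muscles acting on few joints.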

Type: Research Internship (Forschungspraxis)/Semester Thesis (Semesterarbeit)

Requirements for Students:

Tasks:

- Review of SoTA RL algorithms and related methods for musculoskeletal models

- Implementation of frameworks and scenarios of existing methods

- Exploration of performance and limitations of methods

Prerequisites that would be helpful:

- Basic knowledge of musculoskeletal modeling

- Knowledge of RL or related methods

- Python & MuJoCo & PyTorch

If you are interested in any of these topics and would like to know more, please get in touch.

Contact

Junnan Li (junnan.li(at)tum.de)

Yansong Wu (yansong.wu@tum.de)

Deadline: Please apply before 01.11.2025

Proposed date: 06/10/2025

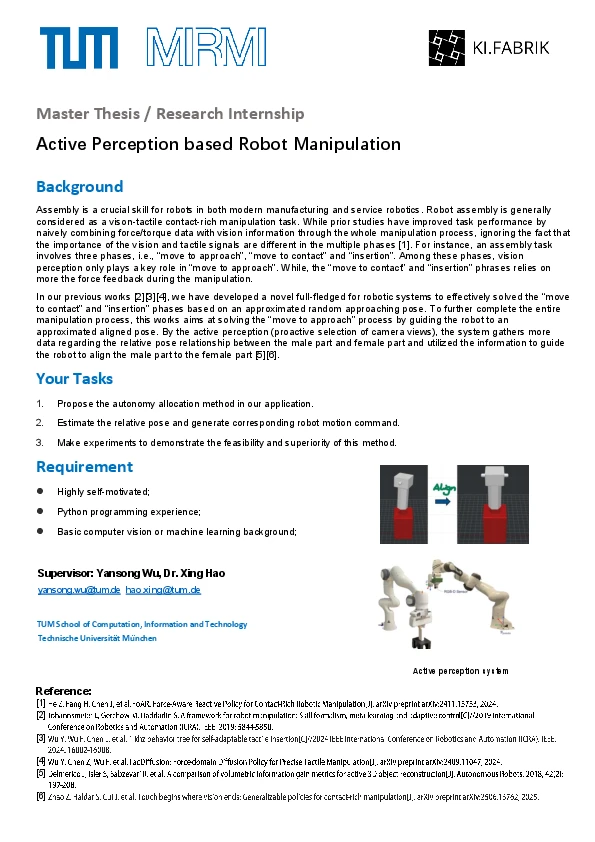

Background: Assembly is a crucial skill for robots in both modern manufacturing and service robotics. Robotic assembly is generally considered a vision-tactile, contact-rich manipulation task. Prior studies have improved task performance by naively combining force/torque data with visual information throughout the entire manipulation process, ignoring the fact that the importance of vision and tactile signals differs across phases [1]. For instance, an assembly task involves three phases: “move to approach”, “move to contact”, and “insertion”. Among these, visual perception plays a key role only in “move to approach”, whereas the “move to contact” and “insertion” phases rely more on force feedback. In our previous works [2][3][4], we developed a novel full-fledged framework that enables robotic systems to effectively solve the “move to contact” and “insertion” phases starting from an approximate, random approach pose. To complete the entire manipulation process, this work aims to solve the “move to approach” phase by guiding the robot to an approximately aligned pose. Through active perception (proactive selection of camera views), the system gathers more information about the relative pose between the male and female parts and uses it to guide the robot in aligning the male part with the female part [5][6].
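The phase structure described above can be made concrete as a small state machine. The sketch below is one simple, hypothetical way to encode the observation that vision matters mainly in “move to approach” while force feedback dominates in “move to contact” and “insertion”: per-phase modality weights plus threshold-based phase transitions. The weights, the alignment tolerance, the force threshold, and the blending rule are all assumed values for illustration, not the method of the cited works.

```python
from enum import Enum


class Phase(Enum):
    APPROACH = "move to approach"
    CONTACT = "move to contact"
    INSERTION = "insertion"


# Hypothetical (vision, force) weights per phase: vision dominates only while
# approaching; force feedback dominates once contact is imminent or established.
MODALITY_WEIGHTS = {
    Phase.APPROACH: (0.9, 0.1),
    Phase.CONTACT: (0.2, 0.8),
    Phase.INSERTION: (0.1, 0.9),
}


def next_phase(phase: Phase, pose_error_m: float, contact_force_n: float,
               align_tol_m: float = 0.005, contact_thresh_n: float = 5.0) -> Phase:
    """Advance the phase; tolerance and force threshold are assumed values."""
    if phase is Phase.APPROACH and pose_error_m < align_tol_m:
        return Phase.CONTACT
    if phase is Phase.CONTACT and contact_force_n > contact_thresh_n:
        return Phase.INSERTION
    return phase


def fuse(phase: Phase, vision_cmd, force_cmd) -> list:
    """Blend per-axis motion commands from both modalities with phase weights."""
    w_vision, w_force = MODALITY_WEIGHTS[phase]
    return [w_vision * v + w_force * f for v, f in zip(vision_cmd, force_cmd)]


phase = Phase.APPROACH
phase = next_phase(phase, pose_error_m=0.003, contact_force_n=0.0)  # aligned, so CONTACT
print(phase, fuse(phase, [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]))
```

In contrast to a fixed fusion over the whole task, the weights here change with the phase, so visual estimates stop dominating the command once the parts are close to contact.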

Your Tasks:

1. Propose an autonomy allocation method for our application.
2. Estimate the relative pose and generate the corresponding robot motion commands.
3. Conduct experiments to demonstrate the feasibility and superiority of this method.
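Task 2 rests on a standard rigid-body computation. If the camera yields the poses of the female and male parts as homogeneous transforms in the camera frame, the male part's pose relative to the female part is T_female_male = inv(T_cam_female) @ T_cam_male, and a motion command can be derived from its error to a desired pre-insertion pose. The NumPy sketch below assumes both poses are already estimated, uses a hypothetical goal offset, gain, and per-cycle step limit, ignores orientation error, and returns the step in the female part's frame (converting it to the robot base frame is left out). It illustrates the geometry only and is not the project's method.

```python
import numpy as np


def make_pose(R: np.ndarray, t) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def relative_pose(T_cam_female: np.ndarray, T_cam_male: np.ndarray) -> np.ndarray:
    """Pose of the male part expressed in the female part's frame."""
    return np.linalg.inv(T_cam_female) @ T_cam_male


def approach_step(T_female_male: np.ndarray, T_female_goal: np.ndarray,
                  gain: float = 0.5, max_step_m: float = 0.01) -> np.ndarray:
    """Clamped proportional translation step toward the goal, in the female frame.

    Orientation error is ignored here for brevity.
    """
    error = T_female_goal[:3, 3] - T_female_male[:3, 3]
    step = gain * error
    norm = np.linalg.norm(step)
    if norm > max_step_m:
        step = step * (max_step_m / norm)  # limit motion per control cycle
    return step


# Assumed camera-frame estimates of both parts (identity rotations for simplicity).
T_cam_female = make_pose(np.eye(3), [0.10, 0.00, 0.50])
T_cam_male = make_pose(np.eye(3), [0.12, 0.01, 0.40])
T_rel = relative_pose(T_cam_female, T_cam_male)
# Hypothetical pre-insertion goal: 5 cm from the hole along the female part's -z axis.
T_goal = make_pose(np.eye(3), [0.0, 0.0, -0.05])
print(T_rel[:3, 3], approach_step(T_rel, T_goal))
```

Working in the female part's frame makes the goal independent of where the camera happens to be, which is convenient when active perception keeps changing the viewpoint.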

Requirements:

- Highly self-motivated
- Python programming experience
- Basic computer vision or machine learning background

Supervisors: Yansong Wu (yansong.wu@tum.de), Dr. Xing Hao (hao.xing@tum.de)

Proposed date: 06/10/2025

Key words: VLA; Foundation Model; Contact-rich Manipulation

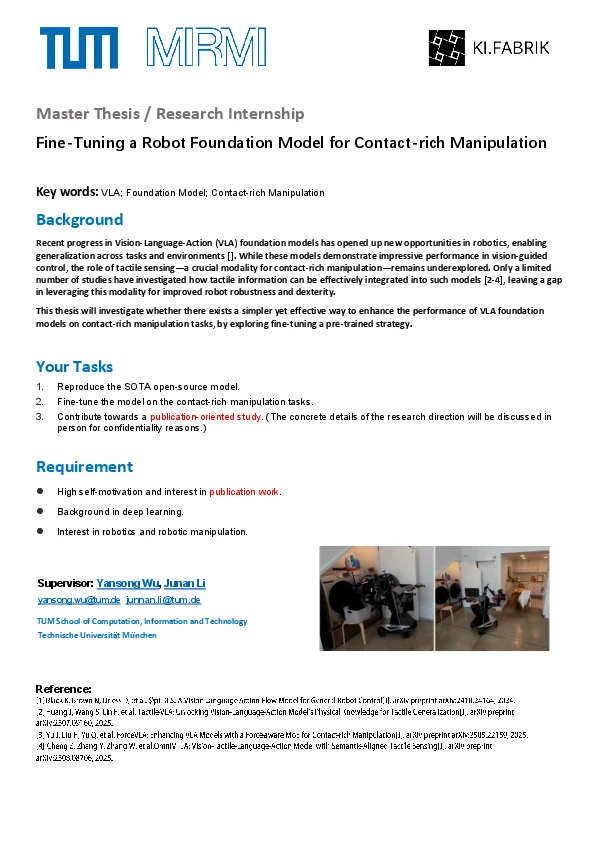

Background: Recent progress in Vision-Language-Action (VLA) foundation models has opened up new opportunities in robotics, enabling generalization across tasks and environments []. While these models show impressive performance in vision-guided control, the role of tactile sensing, a crucial modality for contact-rich manipulation, remains underexplored. Only a few studies have investigated how tactile information can be effectively integrated into such models [2-4], leaving a gap in leveraging this modality to improve robot robustness and dexterity. This thesis will investigate whether a simpler yet effective way exists to enhance the performance of VLA foundation models on contact-rich manipulation tasks by exploring strategies for fine-tuning a pre-trained model.

Your Tasks:

1. Reproduce the state-of-the-art (SOTA) open-source model.
2. Fine-tune the model on contact-rich manipulation tasks.
3. Contribute to a publication-oriented study.

(The concrete details of the research direction will be discussed in person for confidentiality reasons.)

Requirements:

- High self-motivation and interest in working toward a publication
- Background in deep learning
- Interest in robotics and robotic manipulation

Supervisors: Yansong Wu (yansong.wu@tum.de), Junnan Li (junnan.li(at)tum.de)

Fine-Tuning a Robot Foundation Model for Contact-rich Manipulation

Student Assistants (HiWi)

You can find further research opportunities at the chairs of our Principal Investigators!

Data protection notice:

When you apply for a position at the Technical University of Munich (TUM), you submit personal data. Please note our data protection information in accordance with Art. 13 of the General Data Protection Regulation (GDPR) on the collection and processing of personal data in the context of your application. By submitting your application, you confirm that you have taken note of TUM's data protection information.